Table of Contents

Important

For more information on MySQL Load Balancer, including how to be

included in the beta programme, contact

<[email protected]>.

The MySQL Load Balancer is an application that communicates with one or more MySQL servers and provides connectivity to those servers for multiple clients. The MySQL Load Balancer is logically placed between the clients and the MySQL server; instead of clients connecting directly to each MySQL server, all clients connect to the MySQL Load Balancer, and the MySQL Load Balancer forwards the connection on to one of the MySQL servers.

The initial release of the MySQL Load Balancer provides read-only load balancing over a number of MySQL servers. Initially, you populate the MySQL Load Balancer configuration with the list of available MySQL servers to use when distributing work. The MySQL Load Balancer automatically and evenly distributes connections from clients to each server. Distribution is handled by a simple count for the number connections distributed to each server - new connections are automatically sent to the server with the lowest count.

When used in combination with a replication setup, the MySQL Load Balancer also monitors the replication status. The master and slaves within the replication setup are monitored and additional decisions about the routing of incoming connections to MySQL servers are made based on the replication status:

If MySQL Load Balancer identifies that the slave is lagging behind the master for it's replication threads, then the slave is automatically taken out of the list of available servers. Work will therefore be distributed to other MySQL servers within the slave replication group.

If the replication thread on a slave is identified as no longer running, the slave is also automatically removed from the list of available servers.

If either situation changes, such as the replication delay decreases to an acceptable level, or the replication thread on the failed slave is restarted and the replication process catches up, then the slave will be brought back in to the list of available MySQL servers.

The MySQL Load Balancer is based on the MySQL Proxy, and consists of two modules which work together to achieve its goal:

The proxy, which uses Lua scripts to customize the handling of connections and query execution. The `proxy` connects to several backend MySQL instances to which it can send queries.

The monitor plugin connects to each of the backends the proxy knows about and executes queries on each one in regular intervals. The results of those queries are used to determine the state of each backend.

For more information on MySQL Proxy, see Chapter 17, MySQL Proxy.

Important

For more information on MySQL Load Balancer, including how to be

included in the beta programme, contact

<[email protected]>.

MySQL Load Balancer is provided as a TAR/GZipped package. To install, extract the package:

$ gzip -cd load-balancer mysql-load-balancer-0.7.0-438-linux-fc4-x86-32bit.tar.gz | tar xf -

The standard package contents are organized into four directories:

/bin /lib /sbin /share

The bin contains wrapper scripts around the

dynamically linked binaries in sbin. The

lib directory contains the required

libraries, and the share directory contains

the scripts and support files used by the MySQL Load Balancer

during execution.

You can run MySQL Load Balancer directly from this directory, or

you can copy the contents to a a global directory, such as

/usr/local:

$ cp -R * /usr/local/

Important

For more information on MySQL Load Balancer, including how to be

included in the beta programme, contact

<[email protected]>.

The easiest way to understand MySQL Load Balancer is to look at a typical example of how MySQL Load Balancer can be used to improve the distribution of work to multiple MySQL servers.

Given an existing setup of several replicating MySQL servers, you can set up the MySQL Load Balancer to provide you with replication-aware load distribution.

Suppose you have three slaves replicating from one master, the slaves running on the machines slave-1, slave-2, and slave-3, the master being on master-1. Each MySQL server listens on the default port of 3306.

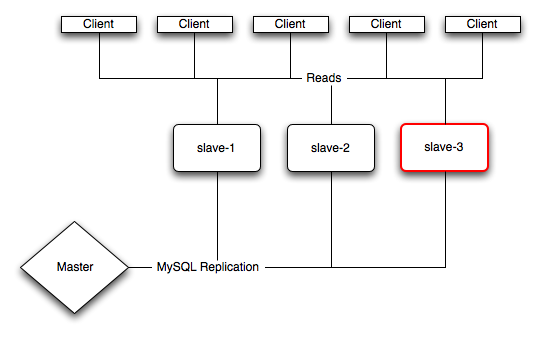

For client connectivity, typical configurations are in one of two topologies. The first topology uses applications that are aware of multiple clients and choose a MySQL server based either on a random selection or by choosing a slave based on a known quantity, such as user ID.

In this scenario, it is possible for a client application to choose a slave that is unavailable, or in a replication situation, a slave that is not up to date compared to the master, or lagging behind the master in terms of processing replication data such that queries accessing the information would fail to return data, or return data that was out of date. In all these cases, the client would be unable to determine the issue (without checking the situation itself). In the event of a failed server, the connection would timeout and another server could be chosen, but the delay could cause problems in the application.

In this scenario, it is also possible for a single MySQL server to become overloaded with requests. For example, if the application was using an ID-based decision model to choose a MySQL server, then a high number of requests for a given ID could produce a very high load on the chosen server. This could affect the replication thread and place the server further behind compared to the master.

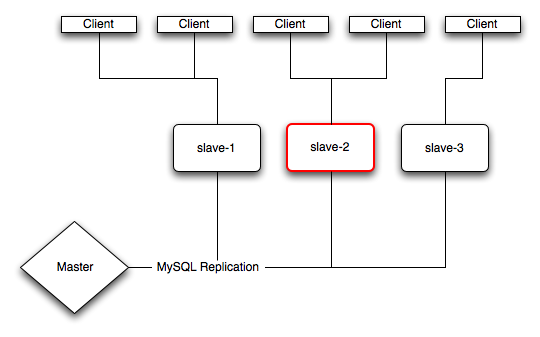

The second topology uses a model where each client has a dedicated MySQL server.

In this scenario, a problem with the MySQL server for an individual client could render the client useless. If the MySQL server is significantly behind the master, you would get out of date or incorrect information. If the MySQL server has failed, the client will be unable to access any information.

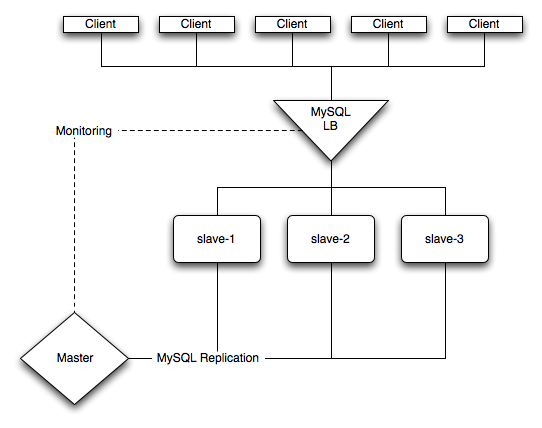

Using the MySQL Load Balancer, you can replace the individual connections from the clients to the slaves and instead route the connections through the MySQL Load Balancer. This will distribute the requests over the individual slave servers, automatically taking account of the load, and accounting for problems or delays in the replication of the data from the master.

In the scenario using MySQL Load Balancer, any failure of a single MySQL server automatically removes it from the pool of available servers and distributes the incoming client connection to one of the other, available, servers. Problems with replication are addressed in the same way, redirecting the connection to a server that is up to date with the master. The possibility of overloading a single MySQL server should also be reduced, since the connections would be distributed evenly among each server.

To start the MySQL Load Balancer in this scenario you would specify the configuration of the master and slave servers on the command line when starting mysql-lb:

$ bin/mysql-lb --proxy-backend-addresses=master-1 \ --proxy-read-only-backend-addresses=slave-1:3306 \ --proxy-read-only-backend-addresses=slave-2:3306 \ --proxy-read-only-backend-addresses=slave-3:3306 \ --proxy-lua-script=share/mysql-load-balancer/monitored-ro-balance.lua \ --monitor-lua-script=share/mysql-load-balancer/monitor-backends.lua

This will start the load balancer, which listens for incoming

client connections on port 4040. The monitor component will

connect to each backend MySQL server with the MySQL user

monitor and no password, to be able to execute

queries on them. If you do not have a MySQL user with that name or

have a password set for the user, you can specify those using the

options `--monitor-username` and `--monitor-password`.

The options in this example set the following options:

--proxy-backend-addresses– sets the address and port number of the MySQL master server in the replication structure. This is required so that MySQL Load Balancer can monitor the status of the server and replication and use this to compare against the status of the slave servers. In the event of a problem, the information gained will be used to prioritse connections to the slaves according to which slave is the most up to date.--proxy-read-only-backend-addresses– each one of these options sets the address and port number (separated by a colon), of a backend MySQL server. You can specify as many servers as you like on the command line simply by adding further options.--proxy-lua-script– specifies the Lua script that will be used to manage to the distribution of requests.--monitor-lua-script– specifies the Lua script that will be used to monitor the backends.

To get a list of all the available options, run

$ mysql-lb --help-all

Important

For more information on MySQL Load Balancer, including how to be

included in the beta programme, contact

<[email protected]>.

When using the MySQL Load Balancer, you must adapt your application to work with the connections provided by the MySQL Load Balancer interface, rather than directly to MySQL servers. The MySQL Load Balancer supports the same MySQL network protocol - you do not need to change the method that you use to communicate with MySQL. You can continue to use the standard MySQL interface appropriate for your application environment.

On each client, you should configure your application to connect to port 4040 on the machine on which you started the MySQL Load Balancer. All MySQL connections for read queries should be sent to the MySQL Load Balancer connection. When a client connects, the connection is routed by MySQL Load Balancer to an appropriate MySQL server. All subsequent queries on that connection will run be executed on the same backed MySQL server. The backend will not be changed after the connection has been established.

If MySQL Load Balancer identifies an issue with the backend MySQL server, then connections to the backend server are closed. Your application should be adapted so that it can re-open a connection if it closes during execution, re-executing the query again if there is failure. MySQL Load Balancer will then choose a different MySQL server for the new connection.

The thresholds with which the monitor considers a slave to be too

far behind are specified in the

monitor-backends.lua file. By default it

checks for information obtained by SHOW SLAVE

STATUS, namely Seconds_Behind_Master

and tries to calculate the amount of data (in bytes) the slave has

to read from the master. The default values for those metrics are

10 seconds and 10 kilobytes, respectively.

Note

You need to restart the MySQL Load Balancer if you change the

monitor-backends.lua script while it is

running. This is different from MySQL Proxy, which automatically

reloads a script if you modify the script during execution.

The load balancing algorithm is specified in the

monitored-ro-balance.lua script. For this

release, it keeps a counter of how many queries each backend has

executed and always picks the backend with the least number of

queries. Look at connect_server() and

pick_ro_backend_least_queries() for the code.

Important

For more information on MySQL Load Balancer, including how to be

included in the beta programme, contact

<[email protected]>.

For this alpha release, there are the following known issues:

Sometimes an assertion in

libeventfails when shutting down mysql-lb. The assertion failure occurs after all client and server connections have been closed already, thus is does not affect the normal operation of the program.When using UNIX domain sockets to specify backends, it logs errors like:

network-mysqld.c.1648: can't convert addr-type 1 into a stringThis is recorded as a Bug#35216 and will be fixed in the next release. The implication is that the backend address is not available in the Lua scripts, it does not impair normal operations of the program.

Important

For more information on MySQL Load Balancer, including how to be

included in the beta programme, contact

<[email protected]>.

The following section includes some common questions and answers for MySQL Load Balancer:

Questions

19.5.1: The current description says that the load balancer is for read-only operation. Does that mean that MySQL Load Balancer will not accept update statements for the slaves?

19.5.2: The MSQL Load Balancer is listed as being 'slave state aware'. Do you check the status of both threads in the replication process.

19.5.3: Is it possible to set the amount of acceptable lag?

19.5.4: Does MySQL Load Balancer handle load balancing based on CPU load, memory load or I/O load?

Questions and Answers

19.5.1: The current description says that the load balancer is for read-only operation. Does that mean that MySQL Load Balancer will not accept update statements for the slaves?

No. Currently, the MySQL Load Balancer doesn't prevent you from making modifications on the slaves. The read-only description is being used to indicate that you should only use this solution for sending quries to existing slave hosts.

19.5.2: The MSQL Load Balancer is listed as being 'slave state aware'. Do you check the status of both threads in the replication process.

Yes. the monitor module runs SHOW SLAVE

STATUS and checkes the status of the replication

process. If there is a problem, either because the slave has

lagged too far behind the master, or because the query

thread has stopped, then the slave will be taken out of the

list of available slaves for distributing queries.

19.5.3: Is it possible to set the amount of acceptable lag?

Yes, you can set the lag time by editing the time within the

load balancer Lua script. Edit the file

share/mysql-load-balancer/ro-balance.lua

and change the line:

max_seconds_lag = 10, -- 10 seconds

Altering the 10 seconds to the lag time that you want to support.

19.5.4: Does MySQL Load Balancer handle load balancing based on CPU load, memory load or I/O load?

Currently we use indirect measurements and balance the distribution of queries by looking at the replication status of the slave nodes. Since the distribution of work is written using Lua, it is possible to use a number of different criteria. Using more complex criteria will be possible in the future.